Sequence Modeling Using a Memory Controller Extension for LSTM

Image credit: Unsplash

Abstract

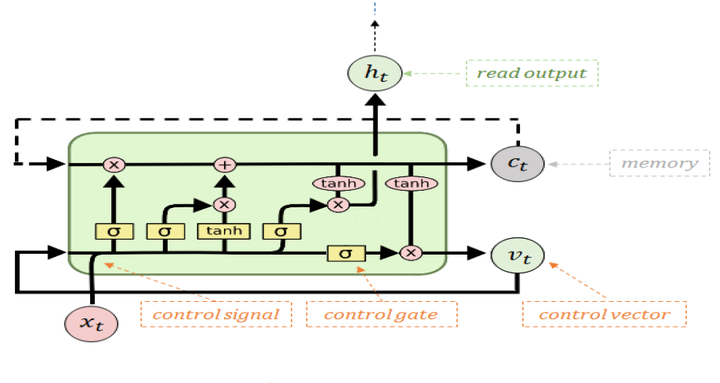

The Long Short-Term Memory (LSTM) recurrent neural network is a powerful model for time-series forecasting and other temporal tasks. In this work, we extend the standard LSTM architecture with an additional gate that produces a memory control vector, inspired by the Differentiable Neural Computer (DNC) model. This vector is fed back to the LSTM in place of the original output prediction. Decoupling the LSTM's prediction from its role as a memory controller allows each output to specialize in its own task. As a result, the prediction of our LSTM depends on its memory state and not the other way around, as in the standard LSTM. We demonstrate our architecture on two time-series forecasting tasks and show that our model achieves up to 8% lower loss than the standard LSTM model.
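The feedback idea described above can be illustrated with a minimal sketch of one recurrent step. The abstract does not give the equations, so everything specific here is an assumption made for illustration: the extra gate `k` is taken to be a sigmoid gate applied to `tanh(c)` in the same way as the output gate, and the names `init_params`, `controller_lstm_step`, and `run_sequence` are hypothetical. The sketch uses only the Python standard library and is not the authors' implementation.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, b, v):
    """Return W @ v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + b_i for row, b_i in zip(W, b)]


def init_params(input_size, hidden_size, seed=0):
    """Small random weights for the four standard gates plus the extra gate."""
    rng = random.Random(seed)
    n_in = input_size + hidden_size

    def mat():
        return [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
                for _ in range(hidden_size)]

    # "i", "f", "o", "g" are the standard LSTM gates;
    # "k" is the assumed additional memory-control gate.
    return {name: (mat(), [0.0] * hidden_size)
            for name in ("i", "f", "o", "g", "k")}


def controller_lstm_step(params, x, m_prev, c_prev):
    """One step: gates read [x, m_prev], where m_prev is the control vector."""
    z = list(x) + list(m_prev)  # the prediction h is NOT part of the recurrence
    i = [sigmoid(a) for a in affine(*params["i"], z)]
    f = [sigmoid(a) for a in affine(*params["f"], z)]
    o = [sigmoid(a) for a in affine(*params["o"], z)]
    k = [sigmoid(a) for a in affine(*params["k"], z)]  # assumed form
    g = [math.tanh(a) for a in affine(*params["g"], z)]

    c = [f_j * c_j + i_j * g_j for f_j, c_j, i_j, g_j in zip(f, c_prev, i, g)]
    tc = [math.tanh(c_j) for c_j in c]
    h = [o_j * t_j for o_j, t_j in zip(o, tc)]  # prediction output
    m = [k_j * t_j for k_j, t_j in zip(k, tc)]  # memory control vector, fed back
    return h, m, c


def run_sequence(params, xs, hidden_size):
    """Unroll over a sequence, feeding back m (not h) at every step."""
    m = [0.0] * hidden_size
    c = [0.0] * hidden_size
    predictions = []
    for x in xs:
        h, m, c = controller_lstm_step(params, x, m, c)
        predictions.append(h)
    return predictions
```

In a standard LSTM, the `z` vector would be built from `h_prev`. Here `h` is only emitted as the prediction, so under these assumptions the prediction depends on the memory state while the memory update never sees the prediction.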